Building a full-stack spam catching app: 2. Backend

#machine-learning

#projects

#python

Written by Matt Sosna on March 14, 2021

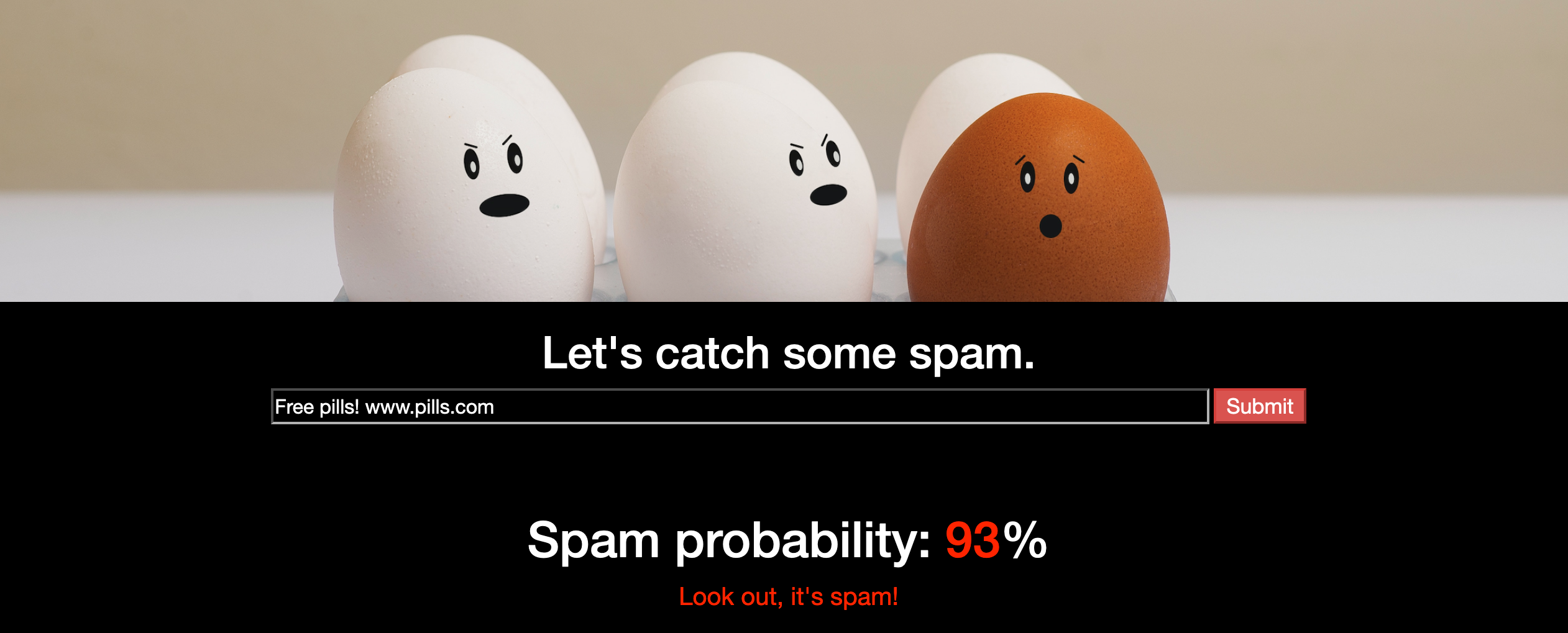

Welcome back! In the last post, we covered the theory for why we use NLP and machine learning for spam classification. In this post, we’ll actually build such a classifier, and we’ll also create a Flask micro web service to make it much easier to interact with our model. In the next post, we’ll connect a sleek frontend to our Flask app so you can use the model without needing to know Python.

If you want to skip ahead, you can check out the actual app here and the source code here. We’ll keep this post simple by excluding a few extra features in the actual app, like a set_model method that loads an existing trained model or an /inspect endpoint that lets us view our model’s accuracy.

The backend

The core of our app is a Python class called SpamCatcher that has two main components:

- A TF-IDF vectorizer that converts strings to TF-IDF vectors

- A random forest classifier that outputs the probability that a TF-IDF vector is spam

Both components must first be trained before they can output TF-IDF vectors or spam probabilities: the vectorizer needs to learn the frequency of terms in the document vocabulary, and the classifier needs to learn the relationship between TF-IDF vectors and spam.
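To make the “train first” requirement concrete, here’s a minimal sketch on a four-message corpus. The messages, labels, and the random_state argument are invented for illustration and aren’t part of the app; the point is only that an unfitted vectorizer refuses to transform text, and that predictions become possible once both pieces have been fitted.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.exceptions import NotFittedError
from sklearn.feature_extraction.text import TfidfVectorizer

# Toy corpus, invented for illustration (1 = spam, 0 = ham)
docs = ["win free cash now", "free prize waiting",
        "see you at lunch", "call me after lunch"]
labels = [1, 1, 0, 0]

vectorizer = TfidfVectorizer()

# An untrained vectorizer has no vocabulary, so it cannot transform anything
try:
    vectorizer.transform(["free cash"])
    untrained_failed = False
except NotFittedError:
    untrained_failed = True

# Step 1: the vectorizer learns the vocabulary and document frequencies
X = vectorizer.fit_transform(docs)

# Step 2: the classifier learns how TF-IDF vectors relate to the labels
clf = RandomForestClassifier(n_estimators=100, random_state=0)
clf.fit(X, labels)

# Only now can we turn a new string into a spam probability
spam_prob = clf.predict_proba(vectorizer.transform(["free cash"]))[0][1]
print(untrained_failed, spam_prob)
```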

We’ll therefore take an object-oriented approach and create a SpamCatcher Python class, storing our vectorizer and classifier as instance attributes. These attributes will be referenced repeatedly in different contexts (e.g. training the model vs. generating predictions), so it’s convenient to keep them somewhere that any code, inside or outside SpamCatcher, can easily reference.

We start with some imports and define a SpamCatcher class with instance attributes set to None.

    import logging

    import pandas as pd
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.model_selection import train_test_split  # used by train_model


    class SpamCatcher:
        """Methods for training a ham-spam classifier"""

        def __init__(self):
            self.tfidf_vectorizer = None
            self.model = None

Now let’s write the code that will actually update these attributes. Note that these are all methods of SpamCatcher, so they’re located within SpamCatcher and their first parameter is always self.

The TF-IDF vectorizer

Rather than coding a TF-IDF vectorizer ourselves, we’ll let scikit-learn’s TfidfVectorizer do all the hard work for us. There are essentially only two steps we need to worry about: training our vectorizer on the sample text messages (so it learns the vector space of term frequencies across all documents), and generating TF-IDF vectors once the vectorizer is trained.

The method extract_features handles both steps for us: it trains the vectorizer if that hasn’t happened yet, then generates the TF-IDF vectors.

    def extract_features(self,
                         labels: pd.Series,
                         docs: pd.Series) -> pd.DataFrame:
        """
        | Create dataframe where each row is a document and each column
        | is a term, weighted by TF-IDF (term frequency - inverse document
        | frequency). The vectorizer lowercases all words and removes
        | English stop words and punctuation.
        |
        | ----------------------------------------------------------------
        | Parameters
        | ----------
        |  labels : pd.Series
        |      Ham/spam classification
        |
        |  docs : pd.Series
        |      Documents to extract features from
        |
        |
        | Returns
        | -------
        |  pd.DataFrame
        """
        if self.tfidf_vectorizer is None:
            self.set_tfidf_vectorizer(docs)

        # Transform documents into TF-IDF features
        features = self.tfidf_vectorizer.transform(docs)

        # Reshape and add back ham/spam label
        # (get_feature_names was removed in scikit-learn 1.2; use get_feature_names_out)
        feature_df = pd.DataFrame(features.toarray(),
                                  columns=self.tfidf_vectorizer.get_feature_names_out())
        feature_df.insert(0, 'label', labels)

        return feature_df

The first step (line 24, the check on self.tfidf_vectorizer) tests whether a vectorizer has already been set. If it hasn’t, self.set_tfidf_vectorizer is called. This function initializes a TfidfVectorizer configured to drop English stop words, then trains the vectorizer on the documents.[1]

    def set_tfidf_vectorizer(self,
                             training_docs: pd.Series) -> None:
        """
        | Fit the TF-IDF vectorizer. Updates self.tfidf_vectorizer
        |
        | --------------------------------------------------------
        | Parameters
        | ----------
        |  training_docs : pd.Series
        |    An iterable of strings, one per document, to use for
        |    fitting the TF-IDF vectorizer
        |
        |
        | Returns
        | -------
        |  None
        """
        self.tfidf_vectorizer = TfidfVectorizer(stop_words='english')
        self.tfidf_vectorizer.fit(training_docs)
        return None

The next step in extract_features (line 28) uses self.tfidf_vectorizer to transform each document into a TF-IDF vector. We then turn the output into a dataframe and insert a column for the “ham” vs. “spam” labels.

The classifier

The second core piece of SpamCatcher is the classifier that takes in a TF-IDF vector and outputs a probability of spam. As with the TF-IDF vectorizer, there are two main steps: 1) training our model, and 2) using it to classify messages.

We start with the aptly titled train_model. This function takes in a dataframe where the first column is the spam/ham labels and the remaining columns are the elements of each document’s TF-IDF vector. Setting X to be the second column onward (line 20) is convenient, as none of this code needs to change if we update our training set and change the number of terms in our vocabulary.

    def train_model(self,
                    df: pd.DataFrame) -> None:
        """
        | Train a random forest classifier on df. Assumes first column
        | is labels and all remaining columns are features. Updates
        | self.model.
        |
        | ------------------------------------------------------------
        | Parameters
        | ----------
        |  df : pd.DataFrame
        |    The data, where first column is labels and remaining columns
        |    are features
        |
        |
        | Returns
        | -------
        |  None
        """
        X = df.iloc[:, 1:]
        y = df.iloc[:, 0]

        # Set spam as target (map returns a new Series and leaves df untouched)
        y = y.map({'ham': 0, 'spam': 1})

        X_train, X_test, y_train, y_test = train_test_split(X, y)

        rf = RandomForestClassifier(n_estimators=100)
        rf.fit(X_train, y_train)
        self.model = rf

        return None

In line 24 we manually specify that our target class is spam. (Pro tip for avoiding mysterious bugs where your model is actually predicting the opposite class!) And in lines 26 onward, we split our data into training and testing, fit our classifier, and then update the model attribute of our class.
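The simplified train_model never touches the held-out split, so here’s a hedged sketch of how you might check two things yourself: that the spam class really ends up in the second column of predict_proba, and how well the model does on rows it hasn’t seen. The synthetic dataframe, its column names, and the random_state arguments are assumptions for illustration, not part of the app.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the TF-IDF dataframe: a label column, then features.
# Column names and values are invented for illustration.
rng = np.random.default_rng(0)
n = 200
labels = np.where(rng.random(n) < 0.3, "spam", "ham")
signal = (labels == "spam").astype(float)
df = pd.DataFrame({
    "label": labels,
    "free": signal + rng.normal(0, 0.3, n),  # informative feature
    "lunch": rng.normal(0, 1, n),            # pure noise
})

X = df.iloc[:, 1:]
y = df.iloc[:, 0].map({"ham": 0, "spam": 1})

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)

# classes_ is sorted, so column 1 of predict_proba is P(label == 1), i.e. P(spam)
print(rf.classes_)

# Accuracy on the rows the model never saw during training
accuracy = rf.score(X_test, y_test)
print(f"Held-out accuracy: {accuracy:.2f}")
```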

Now let’s bring the previous steps together with a load_and_train method. This method takes in a CSV of training data, calls extract_features to turn the strings into TF-IDF vectors, then feeds the processed data into train_model.

    def load_and_train(self,
                       data_path: str) -> None:
        """
        | Main method for class. Instantiates self.model with random forest
        | classifier trained on CSV at data_path.
        """
        raw_df = pd.read_csv(data_path)

        logging.debug("Extracting features")
        clean_df = self.extract_features(raw_df['label'], raw_df['text'])

        logging.debug("Training model")
        self.train_model(clean_df)

        logging.debug("Model training complete")
        return None
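Since load_and_train reads the label and text columns, the CSV needs at least those two headers. Here’s a sketch of that layout with made-up rows; only the column names come from the code above, and the real training file may contain more.

```python
import io

import pandas as pd

# Hypothetical file contents: the messages are made up, but the column names
# match what load_and_train reads (raw_df['label'] and raw_df['text'])
csv_text = """label,text
ham,See you at lunch tomorrow
spam,URGENT! You have won a free prize
ham,Can you call me back?
"""

# io.StringIO lets us treat the string as if it were a file on disk
raw_df = pd.read_csv(io.StringIO(csv_text))

print(raw_df.columns.tolist())
print(raw_df["label"].value_counts().to_dict())
```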

The final main function in SpamCatcher is what actually predicts whether a text message is spam. classify_string takes in a string text message, then converts the string to a TF-IDF vector. It’s critical that this vector has the same features our model was trained on, so we use the same TfidfVectorizer instance that was used to create our training set. This is one major advantage of object-oriented programming; we can easily refer to both this original vectorizer and our random forest classifier because they’re attributes of SpamCatcher.

    def classify_string(self,
                        text: str) -> float:
        """
        | Get the probability that a string is spam. Transforms the
        | string into a TF-IDF vector and then returns self.model's
        | prediction on the vector.
        |
        | ---------------------------------------------------------
        | Parameters
        | ----------
        |  text : str
        |    A raw string to be classified
        """
        if self.tfidf_vectorizer is None or self.model is None:
            raise ValueError("Cannot generate predictions; must first "
                             "set self.tfidf_vectorizer and self.model")

        vec = self.tfidf_vectorizer.transform([text])
        return self.model.predict_proba(vec)[0][1]
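Here’s a short sketch of why reusing the fitted vectorizer matters. The training messages are invented for illustration; the takeaway is that a new string is mapped onto the columns learned during training, and words the vectorizer never saw are simply ignored.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Made-up training messages, for illustration only
training_docs = ["free prize inside", "lunch at noon", "claim your free cash"]

vectorizer = TfidfVectorizer(stop_words="english")
train_vectors = vectorizer.fit_transform(training_docs)

# A new message containing a word ("bitcoin") the vectorizer never saw
new_vector = vectorizer.transform(["free bitcoin"])

# Same number of columns as the training matrix, so the model can consume it
print(train_vectors.shape[1], new_vector.shape[1])

# Only "free" is in the learned vocabulary; "bitcoin" is silently dropped
print(new_vector.nnz)
```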

Classifier summary

Congrats! We’ve just built our machine learning spam classifier. To review, here’s a schematic for what our SpamCatcher class looks like. (For the full code plus some extras not covered above, check out the file here.)

    class SpamCatcher:
        <attributes>
        - TF-IDF vectorizer
        - Random forest model

        <methods>
        - load_and_train
            - extract_features
                - set_tfidf_vectorizer
            - train_model
        - classify_string

At this point, we can use SpamCatcher to generate predictions. If you’re curious, create a folder with the training data CSV, the file that contains SpamCatcher, and demo.py, a new Python file.

    demo_folder/
    |   spam_catcher.py
    |   demo.py
    |   data.csv

In demo.py, we’ll simply import SpamCatcher, train the model on data.csv, and then print out predictions on two strings.

    # demo.py
    import logging

    from spam_catcher import SpamCatcher

    # Surface SpamCatcher's debug messages in the Terminal
    logging.basicConfig(level=logging.DEBUG)

    sc = SpamCatcher()
    sc.load_and_train("data.csv")

    hello_perc = round(100*sc.classify_string('Hello'))
    urgent_perc = round(100*sc.classify_string('Urgent!'))

    print(f"Probability 'Hello' is spam: {hello_perc}%")
    print(f"Probability 'Urgent!' is spam: {urgent_perc}%")

To actually run the code, open a Terminal window, navigate to demo_folder, and type python demo.py.[2] Note that it’ll take a minute since the model first needs to be trained.

    > python demo.py
    DEBUG:root:Extracting features
    DEBUG:root:Training model
    DEBUG:root:Model training complete
    Probability 'Hello' is spam: 0%
    Probability 'Urgent!' is spam: 40%

Great! However, we’re still pretty limited in how we can interact with our model. It’d be a lot nicer if we could generate predictions from a Python script that’s not in the same folder as spam_catcher.py, or even use an entirely different language like JavaScript to interact with the model. In the next section, we’ll use Flask to build out a web server that will grant us much more flexibility.

Flask

Let’s start by creating a directory of folders and files like this:

    spamCatch
    |   app.py
    |   static/
    |   |   __init__.py
    |   |   data/
    |   |   |   data.csv
    |   |   python/
    |   |   |   __init__.py
    |   |   |   spam_catcher.py

In short, app.py is at the top level, with everything else inside the static folder. static and static/python both have __init__ files, which let us load SpamCatcher more easily.[3] The __init__ file in static looks like this:

    from .python import *

And the __init__ file in static/python looks like this:

    from .spam_catcher import SpamCatcher

Now let’s turn to app.py. This file will contain the central “engine” of our app, which we’ll call app. We’ll begin by loading Flask and jsonify from the flask library, loading and instantiating SpamCatcher, and creating our application.

    from flask import Flask, jsonify
    from static import SpamCatcher

    sc = SpamCatcher()
    # Path is relative to spamCatch, the directory we launch the app from
    sc.load_and_train("static/data/data.csv")

    app = Flask(__name__)

We then define our endpoints, or the locations where a user can go to interact with some code. Our final app will have a few extra endpoints that serve user-friendly HTML pages, but for now, let’s only create the endpoint that classifies a string of text sent to it.[4]

    @app.route("/classify/<string:text>")
    def classify(text):
        return jsonify(sc.classify_string(text))

The @app.route decorator contains a URL (/classify/) with a variable (<string:text>) that we declare to be a string. text is passed into the decorated function classify, which sends it to the classify_string method of our instance of SpamCatcher. Finally, we need to convert the resulting float to JSON, which we do with jsonify.
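If you’d like to poke at the route before training anything, here’s a sketch that swaps the model for a stand-in and uses Flask’s built-in test client, so no server needs to be running. fake_classify_string and its hard-coded probabilities are hypothetical placeholders for sc.classify_string.

```python
from flask import Flask, jsonify

app = Flask(__name__)


def fake_classify_string(text: str) -> float:
    """Hypothetical stand-in for sc.classify_string, so no model is needed."""
    return 0.9 if "urgent" in text.lower() else 0.1


@app.route("/classify/<string:text>")
def classify(text):
    return jsonify(fake_classify_string(text))


# The test client sends requests straight to the app, no running server required
client = app.test_client()
response = client.get("/classify/Urgent!%20Send%20money")

print(response.status_code)
print(response.get_json())

# The string converter does not match slashes, so this path finds no route
slash_response = client.get("/classify/either/or")
print(slash_response.status_code)
```

The last request is worth noting: text containing a slash won’t reach classify at all with this route, which is one limitation of passing the message in the URL path.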

Finally, we add the following code to the bottom of app.py. This will actually turn on the server when we’re ready.

    if __name__ == "__main__":
        app.run(debug=True, port=5000)

So all together, app.py looks like this:

    from flask import Flask, jsonify
    from static import SpamCatcher

    sc = SpamCatcher()
    # Path is relative to spamCatch, the directory we launch the app from
    sc.load_and_train("static/data/data.csv")

    app = Flask(__name__)

    @app.route("/classify/<string:text>")
    def classify(text):
        return jsonify(sc.classify_string(text))

    if __name__ == "__main__":
        app.run(debug=True, port=5000)

Flask summary

Congrats, we now have an app! Navigate to the spamCatch directory in a Terminal window, then type the following to launch our app.

    python app.py

Launching the app means that we turn on a little engine that listens for and responds to HTTP requests at 127.0.0.1:5000. 127.0.0.1, also called localhost, is the IP address that refers to your own computer. 5000 is a port on your computer.

Now for the exciting bit. Open up a Jupyter notebook or Python script anywhere on your computer, and run this:

    import requests

    url = "http://localhost:5000/classify/"
    text = "Urgent! Send money: www.bank.com"

    print(requests.get(url+text).json())  # e.g. 0.76 (varies between training runs)

Tada! If you’re paying attention to the Terminal window where you launched your app, you can see the following log that indicates a GET request was placed at your /classify endpoint, and that Flask successfully responded without errors (200).

    INFO:werkzeug:127.0.0.1 - - [13/Mar/2021 21:03:41]
    "GET /classify/Urgent!%20Send%20money:%20www.bank.com HTTP/1.1" 200 -

Conclusions

In the first post in this series, we covered the theory for how to build a classifier to identify spam. In this post, we then built this classifier and created a tiny Flask app to make it easy to interact with the classifier anywhere on our computer.

Because our model is accessible through Flask, we’ve opened up a world of possibilities for customizing how users interact with our app. In the next post, we’ll design a fun frontend so users can interact with the model in a browser rather than in Python. We’ll then finally get our app off our computer and onto the internet for others to play with. See you there!

Best,

Matt

Footnotes

1. The TF-IDF vectorizer

We’re assuming the documents being passed in are our training set if self.tfidf_vectorizer hasn’t been set yet. In a production context, it’d be safer to have an explicit train_vectorizer function so it’s harder to accidentally train the vectorizer on documents you didn’t intend to.

2. Classifier summary

The exact percentages you get will change every time you retrain the model, even on the same data, due to the randomness in the train-test split and in which trees get which bootstrapped subsets of data. You’ll also only get whole percentages for every prediction because our random forest has 100 trees, and the predicted probability is effectively the fraction of trees that conclude that the string is spam. We use round in our example to avoid potential floating-point errors.
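Here’s a sketch of both points on synthetic data. make_classification and the random_state arguments are additions for illustration and aren’t used in the app; the sketch relies on scikit-learn’s default of fully grown trees, where each tree effectively votes 0 or 1.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data standing in for TF-IDF vectors (invented for illustration)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X, y)

# Each fully grown tree votes 0 or 1, so the average over 100 trees
# is a multiple of 0.01
percentages = 100 * rf.predict_proba(X)[:, 1]
print(percentages[:5])

# Fixing random_state makes retraining on the same data reproducible
rf_again = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
same = np.allclose(rf.predict_proba(X), rf_again.predict_proba(X))
print(same)
```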

3. Flask

Here’s a 30-second demo of the value of __init__ files. Imagine you have your code organized in a directory like this:

    src/
    |   __init__.py
    |   classifiers/
    |   |   __init__.py
    |   |   spam_catcher.py

You’re in a Python file outside this directory. Without the __init__ files, to load SpamCatcher from spam_catcher.py, you would need to type this:

    from src.classifiers.spam_catcher import SpamCatcher

But with the __init__ files, you can just type this:

    from src import SpamCatcher

Not only is this much more convenient, it prevents hours of headaches if you ever rearrange folders within your project: any code importing classes from your project automatically redirects to the correct location.
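If you’d like to try this without touching a real project, here’s a sketch that builds a throwaway package in a temporary directory and then uses the short import. The package name demo_src is hypothetical, chosen so it won’t clash with an existing src package, and the __init__ contents mirror the re-export pattern used in the Flask section.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Build a throwaway package on disk. "demo_src" is a hypothetical name chosen
# to avoid clashing with a real "src" package on your machine.
root = Path(tempfile.mkdtemp())
pkg = root / "demo_src" / "classifiers"
pkg.mkdir(parents=True)

(pkg / "spam_catcher.py").write_text("class SpamCatcher:\n    pass\n")

# Each __init__.py re-exports names from the level below it
(pkg / "__init__.py").write_text("from .spam_catcher import SpamCatcher\n")
(root / "demo_src" / "__init__.py").write_text("from .classifiers import *\n")

# Make the temporary directory importable
sys.path.insert(0, str(root))
importlib.invalidate_caches()

from demo_src import SpamCatcher  # the short import

# The class still lives in the nested module
print(SpamCatcher.__module__)
```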

4. Flask

I wasn’t sure whether I’d be able to get away with GET requests for this app. Could I really just store the text to classify in the URL itself? Would the model get confused by spaces getting converted to %20, for example? Fortunately, it turned out to be a total non-issue.
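For the curious, here’s a sketch of the encoding round trip using only the standard library. Keeping “!” and “:” unescaped is a choice made here so the result mirrors the server log above; the decoded string is what the route function ends up receiving.

```python
from urllib.parse import quote, unquote

text = "Urgent! Send money: www.bank.com"

# Percent-encode characters that are not safe in a URL path.
# Keeping "!" and ":" literal is a choice made here to mirror the server log.
encoded = quote(text, safe="!:")
print(encoded)

# Decoding restores the original string exactly
decoded = unquote(encoded)
print(decoded == text)
```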